Iterate your Image Training Set

Arguably the most tedious part of developing and training an AI model is tagging the training set of images. Every image needs to be inspected and then manually tagged. It is a repetitive, boring and time-consuming task, and often one of the first serious impediments to getting started with AI in a manufacturing environment.

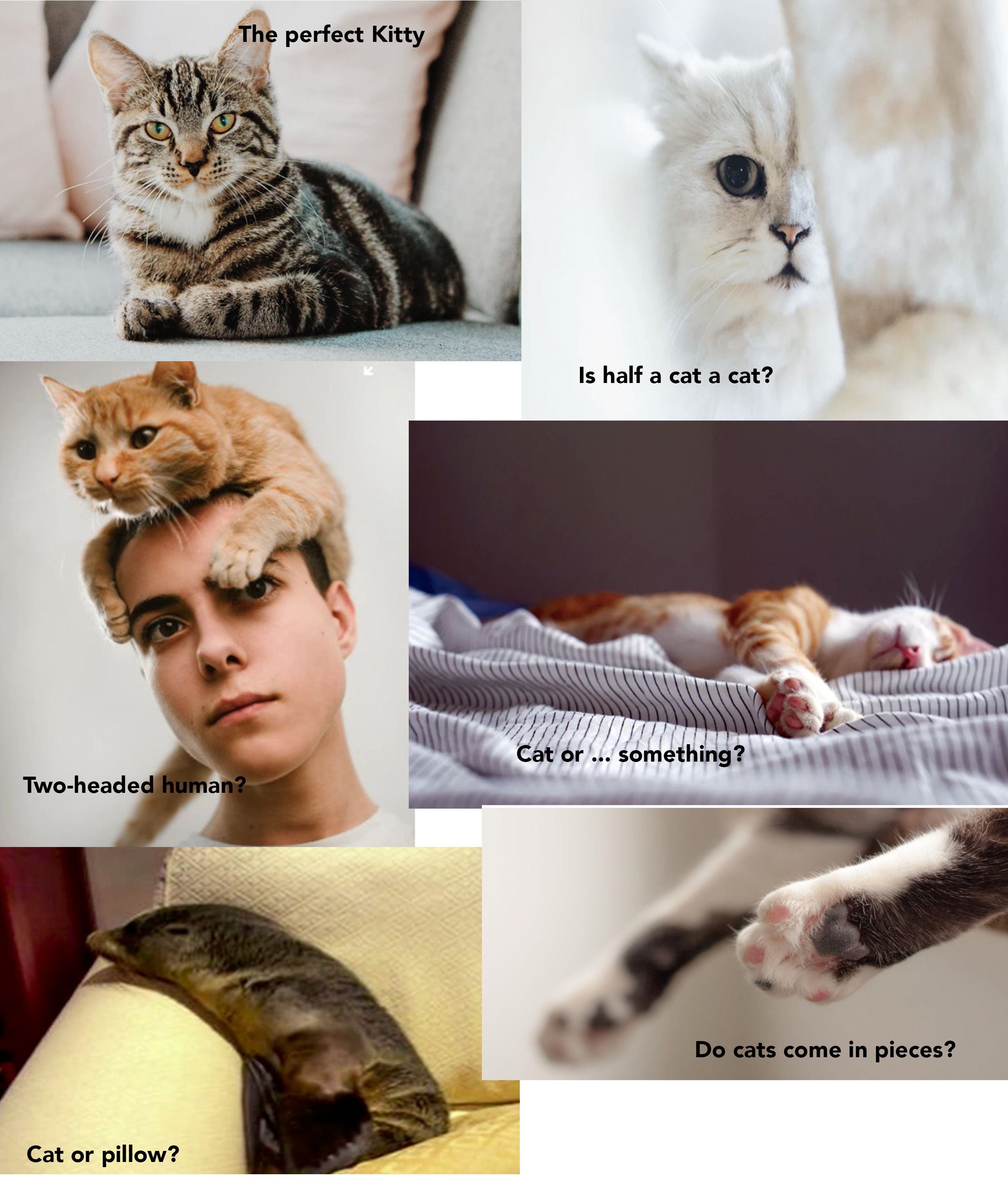

The story of ImageNet comes to mind in that context. ImageNet is the world's first large database of tagged images: nearly a billion images were downloaded from the Internet, then cleaned, sorted and labeled by almost 50,000 people in 167 countries using the Amazon Mechanical Turk platform. That wealth of images and information was used to teach computers to see the world the way a small child learns to see: by seeing cats of all sorts in many different surroundings and poses. By looking at many images tagged with the label “cat”, the computer learns that cats come in an amazing variety of colors, shapes, situations, and environments and, importantly, that not everything that looks like a cat really is a cat.

While it is easy for people everywhere to label cats, churches or computers, crowdsourcing is hardly the way to go when tagging images of your widgets during manufacturing. Too much specialized knowledge is required, even if we leave confidentiality concerns aside. The solution to the problem of tagging the training set is easy but unpleasant: DIY. Someone on the QC team will have to take the time to go through the sets of images needed for training and tag them.

Most people will feel that they could do better things with their time than labeling several hundred or even thousands of images, like getting a root canal or walking over glass shards.

We also know that the larger and more diverse the library of images used for training, the more accurate the results tend to be.

Iterate the Tagging Process

Though the tedious tagging process cannot be avoided entirely, it can be shortened using the following iterative process.

- Start by tagging the minimum set of required images for each of the categories you want to detect, e.g. “good”, “defect 1”, “defect 2” etc. The number per category can vary from mid double digits to a few hundred.

- Set 20% of the images aside as a validation set

- Use the training set (80% of the images) to train the model

- Verify against the validation set that you get reasonably good accuracy. You will probably not hit a home run based on so little training, but in QC and with a good training set the model should classify more than 90% of the images correctly.

- Use the model to tag the next, larger set of images you want to use for training. The model won’t get them all right, but the vast majority will be correctly tagged. The job of tagging hundreds or thousands of images by hand is thereby reduced to checking that the model did a proper job and correcting the occasional mistake.

- Repeat if needed, retraining on the larger tagged set each time, until you reach the desired accuracy based on a large enough training set.
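
The loop above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the "images" are synthetic two-number feature vectors, the "model" is a toy nearest-centroid classifier standing in for whatever you actually train, and every function name (`split_train_validation`, `train`, `pre_label`, `apply_review`) is hypothetical rather than taken from any particular library.

```python
import random


def split_train_validation(labeled, validation_fraction=0.2, seed=0):
    """Shuffle (features, label) pairs and hold out a share for validation."""
    items = list(labeled)
    random.Random(seed).shuffle(items)
    n_val = max(1, round(len(items) * validation_fraction))
    return items[n_val:], items[:n_val]


def train(train_set):
    """Toy stand-in for real training: one mean feature vector per label."""
    grouped = {}
    for features, label in train_set:
        grouped.setdefault(label, []).append(features)
    return {
        label: [sum(column) / len(vectors) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def predict(model, features):
    """Return the label whose centroid is closest to the feature vector."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(model, key=lambda label: squared_distance(model[label]))


def accuracy(model, validation_set):
    """Fraction of held-out images the model labels correctly."""
    hits = sum(predict(model, f) == label for f, label in validation_set)
    return hits / len(validation_set)


def pre_label(model, unlabeled):
    """Propose a label for every new image; a person still reviews each one."""
    return [(features, predict(model, features)) for features in unlabeled]


def apply_review(proposals, corrections):
    """Merge human corrections (index -> correct label) into the proposals."""
    return [(f, corrections.get(i, label)) for i, (f, label) in enumerate(proposals)]


# Synthetic demo data: two well-separated clusters standing in for image features.
rng = random.Random(42)


def fake_images(center, n):
    return [[rng.gauss(center, 0.5), rng.gauss(center, 0.5)] for _ in range(n)]


# Round 1: a small hand-tagged seed set, split 80/20.
seed_set = [(f, "good") for f in fake_images(0.0, 20)]
seed_set += [(f, "defect 1") for f in fake_images(5.0, 20)]
train_set, validation_set = split_train_validation(seed_set)
model = train(train_set)
print(f"validation accuracy: {accuracy(model, validation_set):.0%}")

# Round 2: the model proposes labels, a reviewer fixes the misses,
# and the reviewed images join the training pool for retraining.
proposals = pre_label(model, fake_images(0.0, 50) + fake_images(5.0, 50))
reviewed = apply_review(proposals, corrections={})  # fill in from manual review
model = train(train_set + reviewed)
```

Note that the validation set stays held out across rounds, so the accuracy figure is always measured on images that a person tagged and the model never trained on.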

This process isn’t as painless as paying an army of “Mechanical Turks” to tag your images, but it beats having your in-house staff tag every single image by hand.